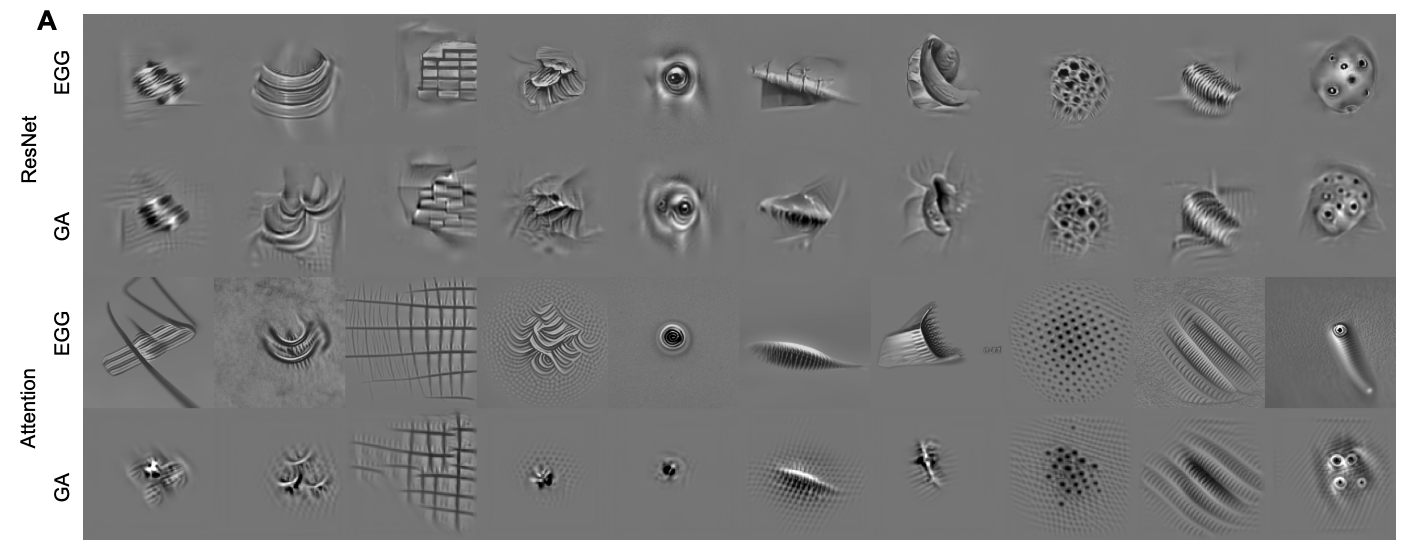

In recent years, most exciting inputs (MEIs) synthesized from encoding models of neuronal activity have become an established method for studying the tuning properties of biological and artificial visual systems. However, as we move up the visual hierarchy, the complexity of neuronal computations increases. Consequently, modeling neuronal activity becomes more challenging and requires more complex models. In this study, we introduce a novel readout architecture inspired by the mechanism of visual attention. This new architecture, which we call attention readout, together with a data-driven convolutional core, outperforms previous task-driven models in predicting the activity of neurons in macaque area V4. However, as our predictive network becomes deeper and more complex, synthesizing MEIs via straightforward gradient ascent (GA) can struggle to produce qualitatively good results and can overfit to the idiosyncrasies of the more complex model, potentially decreasing the MEI’s model-to-brain transferability. To solve this problem, we propose a diffusion-based method for generating MEIs via Energy Guidance (EGG). We show that for models of macaque V4, EGG generates single-neuron MEIs that generalize better across varying model architectures than the state-of-the-art GA, while at the same time reducing computational costs by a factor of 4.7, facilitating experimentally challenging closed-loop experiments. Furthermore, EGG diffusion can be used to generate other neurally exciting images, such as most exciting naturalistic images that are on par with a selection of highly activating natural images, or image reconstructions that generalize better across architectures. Finally, EGG is simple to implement, requires no retraining of the diffusion model, and can easily be generalized to provide other characterizations of the visual system, such as invariances. Thus, EGG provides a general and flexible framework to study the coding properties of the visual system in the context of natural images.

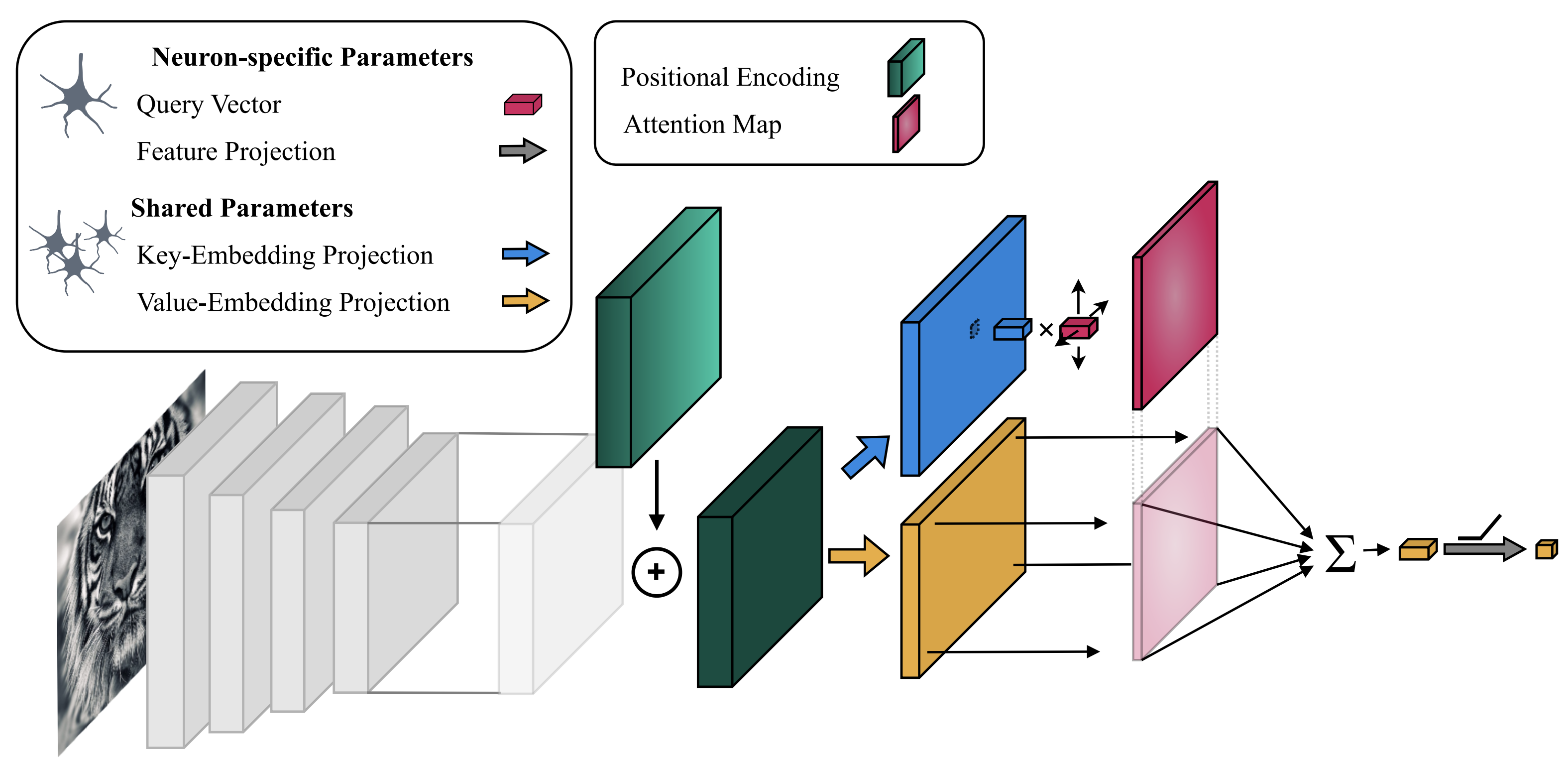

The predictive model is trained from scratch, end to end, to predict neuronal responses. Following Lurz et al. 2021, the architecture comprises two main components. The first is the core, a four-layer CNN with 64 channels per layer whose architecture is identical to that of Lurz et al. 2021. The second is the attention readout, which builds on the attention mechanism used in the popular transformer architecture.
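To make the idea of an attention-based readout concrete, the following is a minimal sketch in plain Python. It is not the implementation used in the paper. It assumes that the core yields one feature vector per spatial location and that each neuron owns a hypothetical query vector, feature weights, and bias. The scaled dot-product pooling and the ELU + 1 output nonlinearity are illustrative assumptions, and all function and parameter names are made up for this example.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_readout(features, query, weights, bias):
    """Toy single-neuron readout (illustrative assumption, not the paper's code).

    features: list of per-location feature vectors from the core (N x C)
    query:    hypothetical per-neuron query vector (length C)
    weights:  hypothetical per-neuron feature weights (length C)
    bias:     hypothetical per-neuron bias
    Returns the predicted response and the attention weights over locations.
    """
    d = len(query)
    # Scaled dot-product scores: how well each location matches the neuron's query.
    scores = [dot(query, f) / math.sqrt(d) for f in features]
    attn = softmax(scores)
    # Attention-weighted average of the feature vectors across locations.
    pooled = [sum(a * f[c] for a, f in zip(attn, features)) for c in range(d)]
    pre = dot(weights, pooled) + bias
    # ELU + 1 keeps the predicted response positive (assumed nonlinearity).
    rate = pre + 1.0 if pre > 0 else math.exp(pre)
    return rate, attn


features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three locations, two channels
rate, attn = attention_readout(features, query=[2.0, 0.0], weights=[1.0, 1.0], bias=0.0)
```

In this toy example the query favors the first channel, so the first and third locations receive more attention than the second. With an all-zero query the attention weights are uniform and the readout reduces to average pooling over locations.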

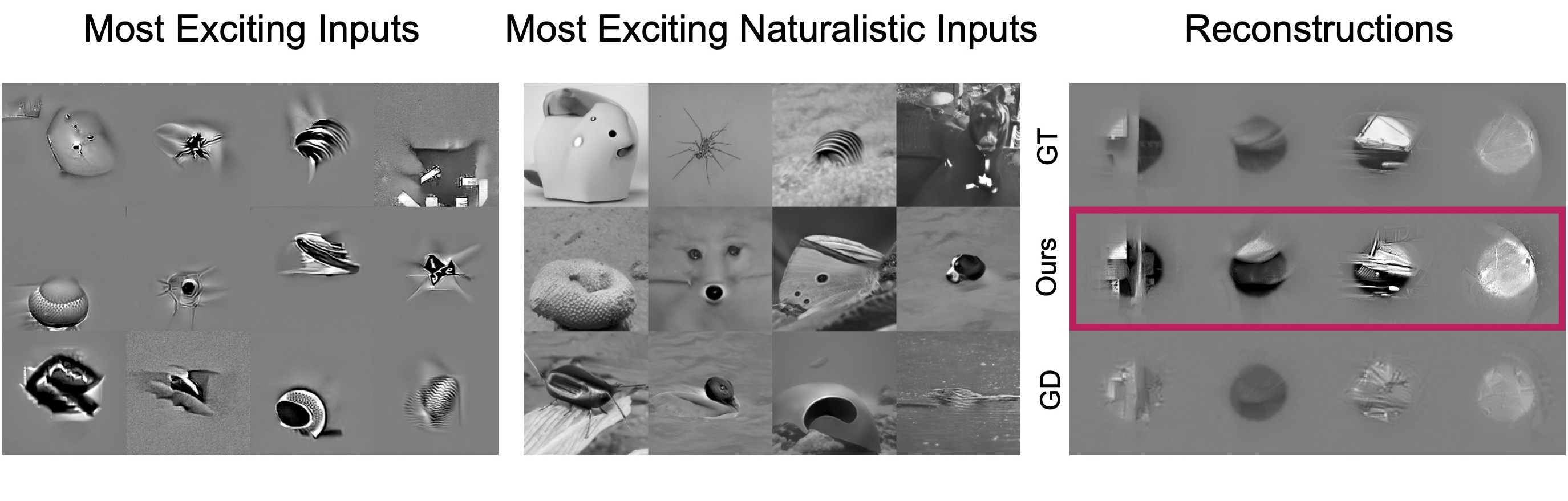

We apply EGG diffusion to characterize the properties of neurons in macaque area V4. For each of these experiments, we use the pre-trained ADM diffusion model trained on 256×256 ImageNet images. We demonstrate EGG on three tasks: 1) Most Exciting Input (MEI) generation, where the method must generate an image that maximally excites an individual neuron, 2) naturalistic image generation, where a natural-looking image is generated that maximizes individual neuron responses, and 3) reconstruction of the input image from predicted neuronal responses.
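The principle behind energy guidance can be illustrated with a deliberately small toy, sketched below under strong assumptions. It does not use the ADM model or the diffusion sampler from the paper. Instead, a standard normal prior stands in for the pre-trained generative model, a hypothetical linear "neuron" stands in for the encoding model, and sampling is done with simple Langevin-style updates. The energy is taken to be the negative neuronal response, so moving against its gradient pushes samples toward higher predicted activity while the prior term keeps them plausible. All names and parameters (`lam`, `step_size`, `noise_scale`) are made up for this example.

```python
import random


def prior_score(x):
    """Gradient of the log-density of a standard normal prior.

    Placeholder for the pre-trained diffusion model, which is NOT reproduced here.
    """
    return [-xi for xi in x]


def neuron_response(x, w):
    """Hypothetical linear 'neuron': the response is the dot product of w and x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def energy_grad(x, w):
    """Gradient of the energy E(x) = -neuron_response(x, w); here simply -w."""
    return [-wi for wi in w]


def guided_sample(w, lam=2.0, steps=500, step_size=0.05, noise_scale=1.0, seed=0):
    """Langevin-style sampling from the prior reweighted by exp(-lam * E(x))."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in w]
    noise_std = noise_scale * (2.0 * step_size) ** 0.5
    for _ in range(steps):
        score = prior_score(x)
        grad_e = energy_grad(x, w)
        # Guided update: follow the prior score and descend the energy, plus noise.
        x = [
            xi + step_size * (si - lam * gi) + noise_std * rng.gauss(0.0, 1.0)
            for xi, si, gi in zip(x, score, grad_e)
        ]
    return x


w = [1.0, -0.5]
x_guided = guided_sample(w, lam=2.0, noise_scale=0.0)    # noise-free limit
x_unguided = guided_sample(w, lam=0.0, noise_scale=0.0)  # no guidance
```

For this toy, the guided density is a normal distribution centered at `lam * w`, so the noise-free run settles close to that point, while the unguided run decays toward the prior mean at zero. The guidance scale `lam` trades off the prior against the energy: larger values increase the toy neuron's response at the cost of moving further from what the prior considers likely.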

@inproceedings{pierzchlewicz2023egg,
  title="Energy Guided Diffusion for Generating Neurally Exciting Images",
  author="Pawel A. Pierzchlewicz and Konstantin F. Willeke and Arne F. Nix and Pavithra Elumalai and Kelli Restivo and Tori Shinn and Cate Nealley and Gabrielle Rodriguez and Saumil Patel and Katrin Franke and Andreas S. Tolias and Fabian H. Sinz",
  booktitle="Advances in Neural Information Processing Systems 36",
  year="2023"
}